Smalltalk80LanguageImplementation:Chapter 21: Difference between revisions

Onionmixer (talk | contribs) (content revised) |

Onionmixer (talk | contribs) (typo fix) |

Latest revision as of 09:30, 13 July 2015

- Chapter 21 Probability Distributions

Probability Distribution Framework

Applications, such as simulations, often wish to obtain values associated with the outcomes of chance experiments. In such experiments, a number of possible questions might be asked, such as:

- What is the probability of a certain event occurring?

- What is the probability of one of several events occurring?

- What is the probability that, in the next N trials, at least one successful event will occur?

- How many successful events will occur in the next N trials?

- How many trials until the next successful event occurs?

Definitions

In the terminology of simulations, a trial is a tick of the simulated clock (where a clock tick might represent seconds, minutes, hours, days, months, or years, depending on the unit of time appropriate to the situation). An event or success is a job arrival such as a car arriving at a car wash, a customer arriving in a bank, or a broken machine arriving in the repair shop.

In the realm of statistics, the probability that an event will occur is typically obtained from a large number of observations of actual trials. For example, a long series of observations of a repair shop would be needed in order to determine the probability of a broken machine arriving in the shop during a fixed time interval. In general, several events might occur during that time interval. The set of possible events is called a sample space. A probability function on a sample space is defined as an association of a number between 0 and 1 with each event in the sample space. The probability or chance that at least one of the events in the sample space will occur is defined as 1; if p is the probability that event E will occur, then the probability that E will not occur is defined as 1 - p.

Sample spaces are classified into two types: discrete and continuous. A sample space is discrete if it contains a finite number of possible events or an infinite number of events that have a one-to-one relationship with the positive integers. For example, the six possible outcomes of a throw of a die constitute a discrete sample space. A sample space is continuous if it contains an ordered, infinite number of events, for example, any number between 1.0 and 4.0. Probability functions on each of these types of sample spaces are appropriately named discrete probability functions and continuous probability functions.

A random variable is a real-valued function defined over the events in a sample space. The adjectives "discrete" and "continuous" apply to random variables according to the characteristic of their range. The probability function of a random variable is called a probability distribution; the values in the range of the function are the probabilities of occurrence of the possible values of the random variable. The density is a function that assigns probabilities to allowed ranges of the random variable. Any function can be a density function (discrete or continuous) if it takes only nonnegative values and its integral (or, in the discrete case, its sum) over the sample space is 1.

Another useful function that plays an important role in simulations is called the cumulative distribution function. It gives the probability that the value of the random variable is within a designated range. For example, the cumulative distribution function answers the question: what is the probability that, in the throw of a die, the side is 4 or less?

The mean is defined as the average value that the random variable takes on. The variance is a measure of the spread of the distribution. It is defined as the average of the square of the deviations from the mean.

Introductory Examples

Two examples of sample spaces are given here before entering into a detailed description of the Smalltalk-80 classes. Suppose the sample space is the possible outcomes of a toss of a die. The sample space consists of

event 1: 1 is thrown

event 2: 2 is thrown

event 3: 3 is thrown

event 4: 4 is thrown

event 5: 5 is thrown

event 6: 6 is thrown

Then, for this discrete probability distribution, the probability function for any event is

f(event) = 1/6

If X is a random variable over the sample space, then the probability distribution of X is g(X) such that

g(X=1) = f(event1) = 1/6, ... , g(X=6) = f(event6) = 1/6.

The density of X is 1/6 for any value of X.

The cumulative distribution function of X is

$$c(a, b) = \sum_{X=a}^{b} g(X)$$

For example,

c(2,4) = g(X=2) + g(X=3) + g(X=4) = 1/6 + 1/6 + 1/6 = 1/2
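The die example can be checked numerically. The following is a minimal sketch in Python (used here only for illustration; it is not part of the Smalltalk-80 classes). The function names g and c mirror the notation in the text, and the use of exact fractions is an assumption of the sketch.

```python
from fractions import Fraction


def g(x):
    """Probability that a fair die shows x: 1/6 for x in 1..6, otherwise 0."""
    return Fraction(1, 6) if x in range(1, 7) else Fraction(0)


def c(a, b):
    """Cumulative distribution: sum of g over the contiguous values a..b."""
    return sum((g(x) for x in range(a, b + 1)), Fraction(0))


print(c(2, 4))  # 1/2
print(c(1, 4))  # 2/3, the probability that the side is 4 or less
```

The second call answers the question posed earlier: the probability that the side thrown is 4 or less.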

As an example of a continuous probability distribution, let the sample space be the time of day where the start of the day is time = 12:00 a.m. and the end of the day is time = 11:59:59.99... p.m. The sample space is the interval between these two times.

The probability function is

$$f(\text{event}) = P(\text{event}_i \le \text{time} < \text{event}_j)$$

where event_i < event_j. The density of X is

g(X = any specified time) = 0

Suppose this is a 24-hour clock. Then the probability that, upon looking at a clock, the time is between 1:00 p.m. and 3:00 p.m., is defined by the cumulative distribution function

$$c(1{:}00, 3{:}00) = \int_{1:00}^{3:00} g(X)\, dX$$

g(X) is uniform over 24 hours. So

c(1:00,3:00) = c(1:00, 2:00) + c(2:00, 3:00) = 1/24 + 1/24 = 1/12.

Class ProbabilityDistribution

The superclass for probability distributions provides protocol for obtaining one or more random samplings from the distribution, and for computing the density and cumulative distribution functions. It has a class variable U which is an instance of class Random. Class Random provides a simple way in which to obtain a value with uniform probability distribution over the interval [0,1].

Like class Random, ProbabilityDistribution is a Stream that accesses elements generated algorithmically. Whenever a random sampling is required, the message next is sent to the distribution. ProbabilityDistribution implements next by returning the result of the message inverseDistribution: var, where the argument var is a random number between 0 and 1. Subclasses of ProbabilityDistribution must implement inverseDistribution: in order to map [0,1] onto their sample space, or else they must override the definition of next. The message next: is inherited from the superclass Stream.

| class name | ProbabilityDistribution |

| superclass | Stream |

| class variable names | U |

| class methods | class initialization

initialize

"Uniformly distributed random numbers in the range [0,1]."

U ← Random new

instance creation

new

↑self basicNew

|

| instance methods | random sampling

next

"This is a general random number generation method for any probability law; use the (0, 1) uniformly distributed random variable U as the value of the law's distribution function. Obtain the next random value and then solve for the inverse. The inverse solution is defined by the subclass."

↑self inverseDistribution: U next

probability functions

density: x

"This is the density function."

self subclassResponsibility

distribution: aCollection

"This is the cumulative distribution function. The argument is a range of contiguous values of the random variable. The distribution is mathematically the area under the probability curve within the specified interval."

self subclassResponsibility

private

inverseDistribution: x

self subclassResponsibility

computeSample: m outOf: n

"Compute the number of ways one can draw a sample without replacement of size m from a set of size n."

m > n ifTrue: [↑0.0].

↑n factorial / (n-m) factorial

|

In order to initialize the class variable U, evaluate the expression

ProbabilityDistribution initialize

Computing the number of ways one can draw a sample without replacement of size m from a set of size n will prove a useful method shared by the subclass implementations that follow.
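To make the sampling protocol concrete, here is a rough Python analogue of the superclass (an illustrative sketch, not the Smalltalk-80 implementation). The method names are Python-style renderings of the selectors above, and FairDie is a hypothetical subclass introduced only to exercise next.

```python
import math
import random


class ProbabilityDistribution:
    """Illustrative Python analogue of the abstract superclass."""

    # Shared uniform source, playing the role of the class variable U.
    U = random.Random()

    def next(self):
        # Inverse-transform sampling: draw u uniformly from [0, 1) and let
        # the subclass map it onto its own sample space.
        return self.inverse_distribution(self.U.random())

    def density(self, x):
        raise NotImplementedError("subclass responsibility")

    def distribution(self, values):
        raise NotImplementedError("subclass responsibility")

    def inverse_distribution(self, u):
        raise NotImplementedError("subclass responsibility")

    @staticmethod
    def compute_sample(m, n):
        # Ordered samples of size m drawn without replacement from n items:
        # n! / (n - m)!, or 0 when m exceeds n.
        if m > n:
            return 0
        return math.factorial(n) // math.factorial(n - m)


class FairDie(ProbabilityDistribution):
    """Hypothetical subclass used only to exercise next()."""

    def inverse_distribution(self, u):
        return int(u * 6) + 1  # maps [0, 1) onto the integers 1..6


print(FairDie().next())                              # some side from 1 to 6
print(ProbabilityDistribution.compute_sample(2, 5))  # 20
```

As in the Smalltalk-80 design, a subclass only has to supply the inverse mapping; the sampling loop itself lives in the superclass.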

Class DiscreteProbability

The two types of probability distributions, discrete and continuous, are specified as subclasses of class ProbabilityDistribution; each provides an implementation of the cumulative distribution function which depends on the density function. These implementations assume that the density function will be provided in subclasses.

| class name | DiscreteProbability |

| superclass | ProbabilityDistribution |

| instance methods | probability functions

distribution: aCollection

"Answer the sum of the discrete values of the density function for each element in the collection."

| t |

t ← 0.0.

aCollection do: [ :i | t ← t + (self density: i)].

↑t

|

Class ContinuousProbability

| class name | ContinuousProbability |

| superclass | ProbabilityDistribution |

| instance methods | probability functions

distribution: aCollection

"This is a slow and dirty trapezoidal integration to determine the area under the probability function curve y=density(x) for x in the specified collection. The method assumes that the collection contains numerically-ordered elements."

| t aStream x1 x2 y1 y2 |

t ← 0.0.

aStream ← ReadStream on: aCollection.

x2 ← aStream next.

y2 ← self density: x2.

[aStream atEnd]

whileFalse:

["advance one trapezoid at a time until the collection is exhausted"

x1 ← x2.

x2 ← aStream next.

y1 ← y2.

y2 ← self density: x2.

t ← t + ((x2-x1)*(y2+y1))].

↑t*0.5

|

In order to implement the various kinds of probability distributions as subclasses of class DiscreteProbability or ContinuousProbability, both the density function and the inverse distribution function (or a different response to next) must be implemented.
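The trapezoidal integration used by ContinuousProbability can be sketched in a few lines of Python (for illustration only). The function name trapezoid_distribution and the helper clock_density are assumptions of this sketch; the clock density reuses the 24-hour example from the introductory section.

```python
def trapezoid_distribution(density, xs):
    """Approximate the area under density over the ordered points xs."""
    total = 0.0
    # Each adjacent pair of points contributes one trapezoid.
    for x1, x2 in zip(xs, xs[1:]):
        total += (x2 - x1) * (density(x2) + density(x1))
    return total * 0.5


def clock_density(hour):
    # Uniform density over a 24-hour day (hypothetical helper).
    return 1.0 / 24.0 if 0.0 <= hour < 24.0 else 0.0


# Probability that the time lies between 13:00 and 15:00, about 1/12.
print(trapezoid_distribution(clock_density, [13.0, 14.0, 15.0]))
```

The accuracy of the estimate depends on how finely the collection samples the interval; for a constant density any spacing gives the same area.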

Discrete Probability Distributions

As an example of a discrete probability distribution, take the heights of a class of 20 students and arrange a table indicating the frequency of students having the same heights (the representation of height is given in inches). The table might be

| measured height | number of students |

| 60" | 3 |

| 62" | 2 |

| 64" | 4 |

| 66" | 3 |

| 68" | 5 |

| 70" | 3 |

Given this information, we might ask the question: what is the probability of randomly selecting a student who is 5'4" tall? This question is answered by computing the density function of the discrete probability associated with the observed information. In particular, we can define the density function in terms of the following table.

| height | density |

| 60" | 3/20 |

| 62" | 2/20 |

| 64" | 4/20 |

| 66" | 3/20 |

| 68" | 5/20 |

| 70" | 3/20 |

Suppose we define a subclass of DiscreteProbability which we name SampleSpace, and provide the above table as the value of an instance variable of SampleSpace. The response to the message density: x is the value associated with x in the table (in the example, the value of x is one of the possible heights); the value of the density of x, where x is not in the table, is 0. The probability of sampling each element of the collection is equally likely, so the density function is the reciprocal of the size of the collection. Since there may be several occurrences of a data element, the probability must be the appropriate sum of the probability for each occurrence. The implementation of the cumulative distribution function is inherited from the superclass.

| class name | SampleSpace |

| superclass | DiscreteProbability |

| instance variable names | data |

| class methods | instance creation

data: aCollection

↑self new setData: aCollection

|

| instance methods | probability functions

density: x

"x must be in the sample space; the probability must sum over all occurrences of x in the sample space"

(data includes: x)

ifTrue: [↑(data occurrencesOf: x) / data size]

ifFalse: [↑0]

private

inverseDistribution: x

↑data at: (x*data size) truncated + 1

setData: aCollection

data ← aCollection

|

Suppose heights is an instance of SampleSpace. The data is an array of 20 elements, the height of each student in the example.

heights ← SampleSpace data: #(60 60 60 62 62 64 64 64 64 66 66 66 68 68 68 68 68 70 70 70)

Then we can ask heights the question, what is the probability of randomly selecting a student with height 64, or what is the probability of randomly selecting a student whose height is between 60" and 64"? The answer to the first question is the density function, that is, the response to the message density: 64. The answer to the second is the cumulative distribution function; that is, the answer is the response to the message distribution: (60 to: 64 by: 2).
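Both answers can be worked out with a small Python sketch of SampleSpace (illustrative only; the Python class is an assumption that mirrors the Smalltalk-80 description, with exact fractions used so the results are easy to read). The Smalltalk interval (60 to: 64 by: 2) corresponds to range(60, 65, 2) here.

```python
from fractions import Fraction


class SampleSpace:
    """Illustrative Python analogue of the SampleSpace class."""

    def __init__(self, data):
        self.data = list(data)

    def density(self, x):
        # Sum over all occurrences of x; 0 when x is not in the data.
        return Fraction(self.data.count(x), len(self.data))

    def distribution(self, values):
        # Sum of the density over each value in the collection.
        return sum((self.density(v) for v in values), Fraction(0))

    def inverse_distribution(self, u):
        # u in [0, 1) selects one element with equal probability.
        return self.data[int(u * len(self.data))]


heights = SampleSpace([60] * 3 + [62] * 2 + [64] * 4
                      + [66] * 3 + [68] * 5 + [70] * 3)
print(heights.density(64))                     # 1/5
print(heights.distribution(range(60, 65, 2)))  # 9/20
```

The first result is 4/20 reduced to lowest terms; the second is (3 + 2 + 4)/20.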

SampleSpace, in many ways, resembles a discrete uniform distribution. In general, a discrete uniform distribution is defined over a finite range of values. For example, we might specify a uniform distribution defined for six values: 1, 2, 3, 4, 5, 6, representing the sides of a die. The density function, as the constant 1/6, indicates that the die is "fair" i.e., the probability that each of the sides will be selected is the same.

We define four kinds of discrete probability distributions that are useful in simulation studies. They are Bernoulli, Binomial, Geometric, and Poisson. A Bernoulli distribution answers the question, will a success occur in the next trial? A binomial distribution represents N repeated, independent Bernoulli distributions, where N is greater than or equal to one. It answers the question, how many successes are there in N trials? Taking a slightly different point of view, the geometric distribution answers the question, how many repeated, independent Bernoulli trials are needed before the first success is obtained? A Poisson distribution is used to answer the question, how many events occur in a particular time interval? In particular, the Poisson determines the probability that K events will occur in a particular time interval, where K is greater than or equal to 0.

The Bernoulli Distribution

A Bernoulli distribution is used in the case of a sample space of two possibilities, each with a given probability of occurrence. Examples of sample spaces consisting of two possibilities are

- The throw of a die, in which I ask, did I get die side 4? The probability of success if the die is fair is 1/6; the probability of failure is 5/6.

- The toss of a coin, in which I ask, did I get heads? The probability of success if the coin is fair is 1/2; the probability of failure is 1/2.

- The draw of a playing card, in which I ask, is the playing card the queen of hearts? The probability of success if the card deck is standard is 1/52; the probability of failure is 51/52.

According to the specification of class Bernoulli, we create a Bernoulli distribution using expressions of the form

Bernoulli parameter: 0.17

In this example, we have created a Bernoulli distribution with a probability of success equal to 0.17. The probability of success is also referred to as the mean of the Bernoulli distribution.

The parameter, prob, of a Bernoulli distribution is the probability that one of the two possible outcomes will occur. This outcome is typically referred to as the successful one. The parameter prob is a number between 0.0 and 1.0. The density function maps the two possible outcomes, 1 or 0, onto the parameter prob or its complement, 1 - prob. The cumulative distribution, inherited from the superclass, sums the density over the outcomes in its argument, so it can only return prob, 1 - prob, or 1.

| class name | Bernoulli |

| superclass | DiscreteProbability |

| instance variable names | prob |

| class methods | instance creation

parameter: aNumber

(aNumber between: 0.0 and: 1.0)

ifTrue:[↑self new setParameter: aNumber]

ifFalse:[self error: 'The probability must be between 0.0 and 1.0']

|

| instance methods | accessing

mean

↑prob

variance

↑prob * (1.0 - prob)

probability functions

density: x

"let 1 denote success"

x = 1 ifTrue: [↑prob].

"let 0 denote failure"

x = 0 ifTrue: [↑1.0 - prob].

self error: 'outcomes of a Bernoulli can only be 1 or 0'

private

inverseDistribution: x

"Depending on the random variable x, the random sample is 1 or 0, denoting success or failure of the Bernoulli trial."

x <= prob

ifTrue: [↑1]

ifFalse: [↑0]

setParameter: aNumber

prob ← aNumber

|

Suppose, at some stage of playing a card game, we wish to determine whether or not the first draw of a card is an ace. Then a possible (randomly determined) answer is obtained by sampling from a Bernoulli distribution with mean of 4/52.

(Bernoulli parameter: 4/52) next

Let's trace how the response to the message next is carried out.

The method associated with the unary selector next is found in the method dictionary of class ProbabilityDistribution. The method returns the value of the expression self inverseDistribution: U next. That is, a uniformly distributed number between 0 and 1 is obtained (U next) in order to be the argument of inverseDistribution:. The method associated with the selector inverseDistribution: is found in the method dictionary of class Bernoulli. This is the inverse distribution function, a mapping from a value prob of the cumulative distribution function onto a value, x, such that prob is the probability that the random variable is less than or equal to x. In a Bernoulli distribution, x can only be one of two values; these are denoted by the integers 1 and 0.
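The trace can be imitated with a short Python sketch (for illustration; bernoulli_inverse is a hypothetical function name, and the seed is arbitrary). It applies the same comparison as inverseDistribution: to many uniform draws and reports the observed fraction of successes.

```python
import random


def bernoulli_inverse(u, prob):
    """Map a uniform draw u onto 1 (success) or 0 (failure)."""
    return 1 if u <= prob else 0


rng = random.Random(21)  # arbitrary seed, chosen only for repeatability
prob = 4 / 52            # probability that the first card drawn is an ace
draws = [bernoulli_inverse(rng.random(), prob) for _ in range(10000)]
print(sum(draws) / len(draws))  # observed success rate, close to 4/52
```

Because the uniform draw falls at or below prob with probability prob, the long-run fraction of 1s approaches the mean of the distribution.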

The Binomial Distribution

In simulations, we use a Bernoulli distribution to tell us whether or not an event occurs, for example, does a car arrive in the next second or will a machine break down today? The binomial distribution answers how many successes occurred in N trials. The density function of a Bernoulli distribution tells us the probability of occurrence of one of two events. In contrast, the density function of a binomial answers the question, what is the probability that x successes will occur in the next N trials?

The binomial distribution represents N repeated, independent Bernoulli trials. It is the same as Bernoulli for N = 1. In the description of class Binomial, a subclass of class Bernoulli, the additional instance variable, N, represents the number of trials. That is, given an instance of Binomial, the response to the message next answers the question, how many successes are there in N trials?

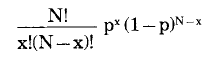

The probability function for the binomial is

where x is the number of successes and p is the probability of success on each trial. The notation "!" represents the mathematical factorial operation. The first terms can be reduced to computing the number of ways to obtain x successes out of N trials, divided by the number of ways to obtain x successes out of x trials. Thus the implementation given below makes use of the method computeSample: a outOf: b provided in the superclass ProbabilityDistribution.

| class name | Binomial |

| superclass | Bernoulli |

| instance variable names | N |

| class methods | instance creation

events: n mean: m

n truncated <= 0 ifTrue: [self error: 'number of events must be > 0'].

↑self new events: n mean: m

|

| instance methods | random sampling

next

| t |

"A surefire but slow method is to sample a Bernoulli N times. Since the Bernoulli returns 0 or 1, the sum will be between 0 and N."

t ← 0.

N timesRepeat: [t ← t + super next].

↑t

probability functions

density: x

(x between: 0 and: N)

ifTrue: [↑((self computeSample: x outOf:N) / (self computeSample: x outOf: x)) * (prob raisedTo: x) * ((1.0 - prob) raisedTo: N - x)]

ifFalse: [↑0.0]

private

events: n mean: m

N ← n truncated.

self setParameter: m/N

"setParameter: is implemented in my superclass"

|

Let's use flipping coins as our example. In five trials of a coin flip, where the probability of heads is 0.5, the Bernoulli distribution with parameter 0.5 represents one trial, i.e., one coin flip.

sampleA ← Bernoulli parameter: 0.5

The result of

sampleA next

is either 1 or 0, answering the question, did I get heads?

Suppose instead we create

sampleB ← Binomial events: 5 mean: 2.5

The result of

sampleB next

is a number between 0 and 5, answering the question, how many heads did I get in 5 trials? The response to the message

sampleB density: 3

is a number between 0 and 1, answering the question, what is the probability of getting heads 3 times in 5 trials?
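For the coin example the density can be worked out directly. The Python sketch below is illustrative only; compute_sample and binomial_density are assumed names that mirror computeSample:outOf: and density: in the class description.

```python
from math import factorial


def compute_sample(m, n):
    """Ordered samples of size m from n items: n! / (n - m)!."""
    return 0 if m > n else factorial(n) // factorial(n - m)


def binomial_density(x, n, p):
    """Probability of exactly x successes in n independent trials."""
    if not 0 <= x <= n:
        return 0.0
    # Ways to choose which x of the n trials succeed.
    ways = compute_sample(x, n) // compute_sample(x, x)
    return ways * p ** x * (1.0 - p) ** (n - x)


print(binomial_density(3, 5, 0.5))  # 0.3125
```

There are 10 ways to place 3 heads among 5 flips, and each particular sequence has probability (1/2)^5, giving 10/32.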

The Geometric Distribution

Suppose we wish to answer the question, how many repeated, independent Bernoulli trials are needed before the first success is obtained? This new perspective on a Bernoulli distribution is the geometric distribution. As in the Bernoulli and binomial cases, the probability of a success is between 0.0 and 1.0; the mean of the geometric is the reciprocal of the success probability. Thus if we create a geometric distribution as

Geometric mean: 5

then the mean is 5 and the probability of a success is 1/5. The mean must be greater than or equal to 1.

A geometric distribution is more suitable for an event-driven simulation design than a Bernoulli or binomial. Instead of asking how many cars arrive in the next 20 seconds (a binomial question), the geometric distribution asks, how many seconds before the next car arrives? In event-driven simulations, the (simulated) clock jumps to the time of the next event. Using a geometric distribution, we can determine when the next event will occur, set the clock accordingly, and then carry out the event, potentially "saving" a great deal of real time.

The probability distribution function is

$$f(x) = p\,(1-p)^{x-1}$$

where x is the number of trials required and p is the probability of success on a single trial.

| class name | Geometric |

| superclass | Bernoulli |

| class methods | instance creation

mean: m

↑self parameter: 1/m

"Note that the message parameter: is implemented in the superclass"

|

| instance methods | accessing

mean

↑1.0 / prob

variance

↑(1.0 - prob) / prob squared

probability functions

density: x

x > 0 ifTrue: [↑prob * ((1.0 - prob) raisedTo: x - 1)]

ifFalse: [↑0.0]

private

inverseDistribution: x

"Method is from Knuth, Vol. 2, pp. 116-117"

↑(x ln / (1.0 - prob) ln) ceiling

|

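Suppose, on the average, two cars arrive at a ferry landing every minute, that is, one car every 30 seconds. Taking one second as a trial, the mean number of trials until the next arrival is 60/2 = 30, so we can express this statistical information as

sample ← Geometric mean: 60/2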

The density function can be used to answer the question, what is the probability that it will take N trials before the next success? For example, what is the probability that it will take 30 seconds before the next car arrives?

sample density: 30

The cumulative distribution function can be used to answer the question, what is the probability that the next car arrives in 30 to 40 seconds?

sample distribution: (30 to: 40)
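These two questions can be evaluated with a small Python sketch (illustrative only). It assumes one trial per second and a success probability of 1/30 per trial, that is, one car every 30 seconds on average; the function names are hypothetical. The inclusive Smalltalk interval (30 to: 40) corresponds to range(30, 41).

```python
import math


def geometric_density(x, p):
    """Probability that the first success occurs on trial x."""
    return p * (1.0 - p) ** (x - 1) if x > 0 else 0.0


def geometric_distribution(values, p):
    """Sum of the density over the given trial counts."""
    return sum(geometric_density(x, p) for x in values)


def geometric_inverse(u, p):
    # Inverse-transform sample; requires 0 < u < 1 and 0 < p < 1.
    return math.ceil(math.log(u) / math.log(1.0 - p))


p = 1 / 30  # assumed success probability per one-second trial
print(geometric_density(30, p))                  # next car at exactly 30 s
print(geometric_distribution(range(30, 41), p))  # next car within 30..40 s
print(geometric_inverse(0.2, 0.5))               # 3
```

The cumulative value can be cross-checked against the closed form (1-p)^29 - (1-p)^40, since the probability of no success in the first n trials is (1-p)^n.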

The Poisson Distribution

Suppose the question we wish to ask is, how many events occur in a unit time (or space interval)? The binomial distribution considers the occurrence of two independent events, such as drawing a king of hearts or a king of spades from a full deck of cards. There are random events, however, that occur at random points in time or space. These events do not occur as the outcomes of trials. In these circumstances, it does not make sense to consider the probability of an event happening or not happening. One does not ask how many cars did not arrive at a ferry or how many airplanes did not land at the airport; the appropriate questions are how many cars did arrive at the ferry and how many airplanes did land at the airport, in the next unit of time?

In simulations, the Poisson distribution is useful for sampling potential demands by customers for service, say, of cashiers, salesmen, technicians, or Xerox copiers. Experience has shown that the rate at which the service is provided often approximates a Poisson probability law.

The Poisson law describes the probability that exactly x events occur in a unit time interval, when the mean rate of occurrence per unit time is the variable mu. For a time interval of dt, the probability is mu*dt; mu must be greater than 0.0.

The probability function is

$$f(x) = \frac{a^{x} e^{-a}}{x!}$$

where a is the mean rate (or mu), e the base of natural logarithms, x is the number of occurrences, and ! the factorial notation.

| class name | Poisson |

| superclass | DiscreteProbability |

| instance variable names | mu |

| class methods | instance creation

mean: p

"p is the average number of events happening per unit interval."

p > 0.0

ifTrue: [↑self new setMean: p]

ifFalse: [self error: 'mean must be greater than 0.0']

|

| instance methods | accessing

mean

↑mu

variance

↑mu

random sampling

next

"how many events occur in the next unit interval?"

| p n q |

p ← mu negated exp.

n ← 0.

q ← 1.0.

[q ← q * U next.

q >= p]

whileTrue: [n ← n + 1].

↑n

probability functions

density: x

"the probability that in a unit interval, x events will occur"

x >= 0

ifTrue: [↑((mu raisedTo: x) * (mu negated exp)) / x factorial]

ifFalse: [↑0.0]

private

setMean: p

mu ← p

|

The response to the message next answers the question, how many events occur in the next unit of time or space? The density function of x determines the probability that, in a unit interval (of time or space), x events will occur. The cumulative distribution function of x determines the probability that, in a unit interval, x events or fewer will occur.
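The sampling loop in next multiplies uniform random numbers together until the running product drops below e^(-mu) and counts how many multiplications were needed. A Python sketch of the same idea follows (illustrative only; the function names and the seed are assumptions).

```python
import math
import random


def poisson_next(mu, rng):
    """Count uniform draws until their running product drops below e^-mu."""
    p = math.exp(-mu)
    n = 0
    q = rng.random()
    while q >= p:
        n += 1
        q *= rng.random()
    return n


def poisson_density(x, mu):
    """Probability that exactly x events occur in a unit interval."""
    if x < 0:
        return 0.0
    return mu ** x * math.exp(-mu) / math.factorial(x)


rng = random.Random(21)  # arbitrary seed for repeatability
samples = [poisson_next(3.0, rng) for _ in range(20000)]
print(sum(samples) / len(samples))                     # close to mu = 3
print(sum(poisson_density(x, 3.0) for x in range(4)))  # P(3 events or fewer)
```

The sample mean should settle near mu, which is both the mean and the variance of the distribution.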

Continuous Probability Distributions

A continuous random variable can assume any value in an interval or collection of intervals. In the continuous case, questions similar to those asked in the discrete case are asked and the continuous probability distributions show strong correspondence to the discrete ones. An example of a question one asks of a continuous probability distribution is, what is the probability of obtaining a temperature at some moment of time? Temperature is a physical property which is measured on a continuous scale.

We define four kinds of continuous probability distributions; they are uniform, exponential, gamma, and normal distributions. The uniform distribution answers the question, given a set of equally likely events, which one occurs? Given that the underlying events are Poisson distributed, the exponential distribution is used to answer the question, how long before the first (next) event occurs? The gamma distribution is related in that it answers the question, how long before the Nth event occurs? The normal or Gaussian distribution is useful for approximating limiting forms of other distributions. It plays a significant role in statistics because it is simple to use; symmetrical about the mean; completely determined by two parameters, the mean and the variance; and reflects the distribution of everyday events.

The Uniform Distribution

We have already examined the uniform distribution from the perspective of selecting discrete elements from a finite sample space. The question we asked was, given a set of equally likely descriptions, which one to pick? In the continuous case, the sample space is a continuum, such as time or the interval between 0 and 1. The class Uniform provided here extends the capabilities of class Random by generating a random variable within any interval as a response to the message next.

| class name | Uniform |

| superclass | ContinuousProbability |

| instance variable names | startNumber stopNumber |

| class methods | instance creation

from: begin to: end

begin > end

ifTrue: [self error: 'illegal interval']

ifFalse: [↑self new setStart: begin toEnd: end]

|

| instance methods | accessing

mean

↑(startNumber + stopNumber)/2

variance

↑(stopNumber - startNumber) squared / 12

probability functions

density: x

(x between: startNumber and: stopNumber)

ifTrue: [↑1.0 / (stopNumber - startNumber)]

ifFalse: [↑0]

private

inverseDistribution: x

"x is a random number between 0 and 1"

↑startNumber + (x * (stopNumber - startNumber))

setStart: begin toEnd: end

startNumber ← begin.

stopNumber ← end

|

The Exponential Distribution

Given that the underlying events are Poisson distributed, the exponential distribution determines how long before the next event occurs. This is more suitable for a simulation design than is Poisson in the same sense that the geometric distribution was more suitable than the binomial, because we can jump the simulated clock setting to the next occurrence of an event, rather than stepping sequentially through each time unit.

As an example of sampling with an exponential, we might ask, when will the next car arrive? The density function of x is the probability that the next event will occur in the time interval x, for example, what is the probability of the next car arriving in the next 10 minutes?

Exponential is typically used in situations in which the sample deteriorates with time. For example, an exponential is used to determine the probability that a light bulb or a piece of electronic equipment will fail prior to some time x. Exponential is useful in these cases because the longer the piece of equipment is used, the less likely it is to keep running.

As in the case of a Poisson, the parameter of the exponential distribution, mu, is in terms of events per unit time, although the domain of this distribution is time (not events).

The probability function for the exponential distribution is

$$f(x) = \frac{e^{-x/a}}{a}$$

where a is the mean time between occurrences (mu = 1/a).

| class name | Exponential |

| superclass | ContinuousProbability |

| instance variable names | mu |

| class methods | instance creation

mean: p

"Since the exponential parameter mu is the same as Poisson mu, if we are given the mean of the exponential, we take reciprocal to get the probability parameter"

↑self parameter: 1.0/p

parameter: p

p > 0.0

ifTrue: [↑self new setParameter: p]

ifFalse: [self error: 'The probability parameter must be greater than 0.0']

|

| instance methods | accessing

mean

↑1.0/mu

variance

↑1.0/(mu * mu)

probability functions

density: x

x > 0.0

ifTrue: [↑mu * (mu * x) negated exp]

ifFalse: [↑0.0]

distribution: anInterval

anInterval stop <= 0.0

ifTrue: [↑0.0]

ifFalse: [↑1.0 - (mu * anInterval stop) negated exp -

(anInterval start > 0.0

ifTrue: [self distribution: (0.0 to: anInterval start)]

ifFalse: [0.0])]

private

inverseDistribution: x

"implementation according to Knuth, Vol. 2, p. 114"

↑x ln negated / mu

setParameter: p

mu ← p

|
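
For readers who want to experiment outside a Smalltalk-80 image, the class description above can be mirrored in a few lines of Python. This is a sketch under assumptions, not a port: the method names (`with_mean`, `inverse_distribution`) are hypothetical renderings of the Smalltalk selectors, and the interval argument of `distribution:` is passed as two numbers.

```python
import math

class Exponential:
    """Python mirror of the Smalltalk class above (a sketch, not a port)."""

    def __init__(self, mu):
        if mu <= 0.0:
            raise ValueError("The probability parameter must be greater than 0.0")
        self.mu = mu

    @classmethod
    def with_mean(cls, mean):
        # mean: p  ->  parameter: 1.0/p
        return cls(1.0 / mean)

    def density(self, x):
        return self.mu * math.exp(-self.mu * x) if x > 0.0 else 0.0

    def distribution(self, start, stop):
        # Area under the density between start and stop.
        if stop <= 0.0:
            return 0.0
        upper = 1.0 - math.exp(-self.mu * stop)
        lower = self.distribution(0.0, start) if start > 0.0 else 0.0
        return upper - lower

    def inverse_distribution(self, u):
        # Maps a uniform value u in (0, 1] to a sample: -ln(u) / mu.
        return -math.log(u) / self.mu

cars = Exponential.with_mean(10.0)    # one car every 10 minutes on average
p_within_10 = cars.distribution(0.0, 10.0)
```

Here `p_within_10` is the probability that the next car arrives within 10 minutes, and `inverse_distribution` turns a uniform random number into an exponential sample in the same way as `inverseDistribution:`.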

The Gamma Distribution

A distribution related to the exponential is gamma, which answers the question, how long before the Nth event occurs? For example, we use a gamma distribution to sample how long before the Nth car arrives at the ferry landing. Each instance of class Gamma represents an Nth event and the probability of occurrence of that Nth event (inherited from the superclass Exponential). The variable N specified in class Gamma must be a positive integer.
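
The relationship to the exponential can be made concrete: if the gaps between consecutive events are independent exponential samples, the waiting time until the Nth event is the sum of N such gaps. The Python sketch below illustrates this under that assumption; the function name `time_until_nth_event` is hypothetical and `random.expovariate` stands in for an exponential sampler.

```python
import random

def time_until_nth_event(n, mu, rng):
    """Sample the waiting time until the n-th event at mean rate mu.

    Assumes each gap between consecutive events is an independent
    exponential sample, so the waiting time is the sum of n gaps.
    """
    return sum(rng.expovariate(mu) for _ in range(n))

rng = random.Random(7)                # seeded only for repeatability
samples = [time_until_nth_event(n=3, mu=0.5, rng=rng) for _ in range(20000)]
sample_mean = sum(samples) / len(samples)   # should be near n / mu = 6.0
```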

The probability function is

<math>f(x) = \frac{x^{k-1} e^{-x/a}}{a^k (k-1)!}</math>

where k is greater than zero and the probability parameter mu is 1/a. The second term of the denominator, (k-1)!, is the gamma function when it is known that k is greater than 0. The implementation given below does not make this assumption.

| class name | Gamma |

| superclass | Exponential |

| instance variable names | N |

| class methods | instance creation

events: k mean: p

k ← k truncated.

k > 0

ifTrue: [↑(self parameter: k/p) setEvents: k]

ifFalse: [self error: 'the number of events must be greater than 0']

|

| instance methods | accessing

mean

↑super mean * N

variance

↑super variance * N

probability functions

density: x

| t |

x > 0.0

ifTrue: [t ← mu * x.

↑(mu raisedTo: N) / (self gamma: N) * (x raisedTo: N - 1) * t negated exp]

ifFalse: [↑0.0]

private

gamma: n

| t |

t ← n - 1.0.

↑self computeSample: t outOf: t

setEvents: events

N ← events

|
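
As a sanity check on the density method above, the following Python sketch evaluates the same expression and integrates it numerically. It is an illustration only: `gamma_density` and `integrate` are hypothetical helper names, `math.factorial(n - 1)` plays the role of the private `gamma:` method for a positive integer n, and the rate is chosen as mu = n / mean, matching `events: k mean: p`.

```python
import math

def gamma_density(x, n, mu):
    """Density of the waiting time to the n-th event at rate mu."""
    if x <= 0.0:
        return 0.0
    return mu ** n / math.factorial(n - 1) * x ** (n - 1) * math.exp(-mu * x)

def integrate(f, lo, hi, steps=100000):
    # Plain midpoint rule; accurate enough for a sanity check.
    h = (hi - lo) / steps
    return h * sum(f(lo + (i + 0.5) * h) for i in range(steps))

n, mean = 3, 12.0
mu = n / mean                         # same choice as events: k mean: p
area = integrate(lambda x: gamma_density(x, n, mu), 0.0, 200.0)
avg = integrate(lambda x: x * gamma_density(x, n, mu), 0.0, 200.0)
```

The area under the curve comes out close to 1 and the average close to the requested mean of 12, which agrees with the `mean` method answering `super mean * N`.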

The Normal Distribution

The normal distribution, also called the Gaussian, is useful for summarizing or approximating other distributions. Using the normal distribution, we can ask questions such as how long before a success occurs (similar to the discrete binomial distribution) or how many events occur in a certain time interval (similar to the Poisson). Indeed, the normal can be used to approximate a binomial when the number of events is very large or a Poisson when the mean is large. However, the approximation is only accurate in the regions near the mean; the errors in approximation increase towards the tail.
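
The claim about accuracy near the mean versus the tail can be checked numerically. The Python sketch below compares a binomial with 100 trials and success probability 0.5 (values chosen only for illustration) against a normal with the same mean and standard deviation; all function names are hypothetical.

```python
import math

def binomial_pmf(k, n, p):
    return math.comb(n, k) * p ** k * (1 - p) ** (n - k)

def normal_density(x, mu, sigma):
    t = (x - mu) / sigma
    return math.exp(-0.5 * t * t) / (sigma * math.sqrt(2 * math.pi))

n, p = 100, 0.5
mu = n * p                            # binomial mean
sigma = math.sqrt(n * p * (1 - p))    # binomial standard deviation

def relative_error(k):
    exact = binomial_pmf(k, n, p)
    return abs(normal_density(k, mu, sigma) - exact) / exact

near_mean = relative_error(50)        # at the mean
in_tail = relative_error(70)          # four standard deviations out
```

For these parameters the relative error at the mean is a fraction of a percent, while four standard deviations out it exceeds ten percent.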

A normal distribution is used when there is a central dominating value (the mean), and the probability of obtaining a value decreases with its deviation from the mean. If we plot a curve with the possible values on the x-axis and the probabilities on the y-axis, the curve will look like a bell shape. This bell shape is due to the requirements that the probabilities are symmetric about the mean, the possible values from the sample space are infinite, and yet the probabilities of all of these infinite values must sum to 1. The normal distribution is useful when determining the probability of a measurement, for example, when measuring the size of ball bearings. The measurements will result in values that cluster about a central mean value, off by a small amount.

The parameters of a normal distribution are the mean (mu) and a standard deviation (sigma). The standard deviation must be greater than 0. The probability function is

<math>f(x) = \frac{1}{b \sqrt{2\pi}} e^{-\frac{(x-a)^2}{2b^2}}</math>

where a is the parameter mu and b is the standard deviation sigma.

| class name | Normal |

| superclass | ContinuousProbability |

| instance variable names | mu sigma |

| class methods | instance creation

mean: a deviation: b

b > 0.0

ifTrue: [↑self new setMean: a standardDeviation: b]

ifFalse: [self error: 'standard deviation must be greater than 0.0']

|

| instance methods | accessing

mean

↑mu

variance

↑sigma squared

random sampling

next

"Polar method for normal deviates, Knuth vol. 2, pp. 104, 113"

| v1 v2 s rand u |

rand ← Uniform from: -1.0 to: 1.0.

[v1 ← rand next.

v2 ← rand next.

s ← v1 squared + v2 squared.

s >= 1] whileTrue.

u ← (-2.0 * s ln / s) sqrt.

↑mu + (sigma * v1 * u)

probability functions

density: x

| twoPi t |

twoPi ← 2 * 3.1415926536.

t ← x - mu / sigma.

↑(-0.5 * t squared) exp / (sigma * twoPi sqrt)

private

setMean: m standardDeviation: s

mu ← m.

sigma ← s

|
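
The polar method used by `next` above can be tried out with the following Python sketch. It follows the same steps (two uniform values in -1 to 1, rejection of points outside the unit circle, then scaling), but it is an illustration under assumptions: the function name `normal_sample` is hypothetical, Python's `random.Random.uniform` stands in for class Uniform, and the extra `s > 0` guard is an addition of this sketch to avoid taking the logarithm of zero.

```python
import math
import random

def normal_sample(mu, sigma, rng):
    """Polar method: draw one normal deviate with mean mu, deviation sigma."""
    while True:
        v1 = rng.uniform(-1.0, 1.0)
        v2 = rng.uniform(-1.0, 1.0)
        s = v1 * v1 + v2 * v2
        # Accept only points strictly inside the unit circle. The s > 0
        # guard is an addition of this sketch to avoid log(0).
        if 0.0 < s < 1.0:
            break
    u = math.sqrt(-2.0 * math.log(s) / s)
    return mu + sigma * v1 * u

rng = random.Random(1)                # seeded only for repeatability
xs = [normal_sample(10.0, 2.0, rng) for _ in range(20000)]
mean = sum(xs) / len(xs)
deviation = math.sqrt(sum((x - mean) ** 2 for x in xs) / len(xs))
```

With enough samples, `mean` and `deviation` settle near the requested mu and sigma, which is a quick way to confirm that the sampler and the two parameters agree.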

In subsequent chapters, we define and provide examples of using class descriptions that support discrete, event-driven simulation. The probability distributions defined in the current chapter will be used throughout the example simulations.